My company, VIPAAR, made the cover of the Birmingham Business Journal. That is all.


Using Open Trip Planner, I have set up a website for riders of the BJCTA MAX bus in Birmingham, AL to plan out their trips. I only have a few of the routes online right now. More routes will be available as their stops are cataloged. Please help test the existing routes and verify the times and locations! You can find the trip planner site below:

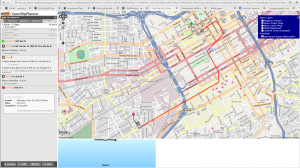

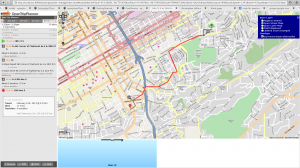

Continuing on with my project to get Birmingham’s MAX bus system on Google Transit, I was able to get the Open Trip Planner software up and running. This lets me visualize some of the preliminary GTFS data and validate it before I continue work on the rest of the routes. Here are two examples showing trip planning between various locations. The stop locations are coming from my stop cataloging site.

Birmingham’s bus system, the MAX, still isn’t up on Google Transit. As a bus rider, I have found this to be a huge hurdle in getting more riders. After four years of their website showing a “coming soon” notice, and still no trip planner in sight, I’ve decided to take on the project myself.

I started off looking for some free programs to create GTFS data. I was unable to find what I needed, as they all assumed you already had a database of your stops and just needed to convert the data. Unfortunately, the BJCTA does not publicly publish their stop locations, and I’m convinced they don’t even know where their stops are located. This meant I needed to catalog all the stops too.

I’ve started work on software to help me with this project. The project consists of two parts:

You can find my website for cataloging stop locations here. This site is designed so that anytime you see a bus stop, you can take a picture of it with a GPS-enabled phone and upload it. This gives me the location of the stop, plus I can see which routes pass through that stop. So far, with the help of the community, I have cataloged over 800 stops. I am estimating there are about 2500 stops in Birmingham, so we still have a long way to go.
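Geotagged photos usually store the position as degrees, minutes, and seconds plus a hemisphere letter, while GTFS wants signed decimal degrees. Here is a minimal sketch of that conversion. The function name and the sample reading are hypothetical and are not taken from the cataloging site's code.

```python
def dms_to_decimal(degrees, minutes, seconds, ref):
    """Convert a degrees/minutes/seconds reading to signed decimal degrees.

    ref is the hemisphere letter: 'N', 'S', 'E', or 'W'.
    """
    if ref not in ("N", "S", "E", "W"):
        raise ValueError(f"unknown hemisphere reference: {ref!r}")
    value = degrees + minutes / 60.0 + seconds / 3600.0
    # South latitudes and west longitudes are negative.
    return -value if ref in ("S", "W") else value


# Made-up reading roughly in the Birmingham, AL area.
lat = dms_to_decimal(33, 31, 12.0, "N")
lon = dms_to_decimal(86, 48, 36.0, "W")
print(round(lat, 6), round(lon, 6))
```

Reading the raw values out of the photo is a separate step and depends on whichever EXIF library you prefer.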

As the community helps me catalog stops, I am also developing an application that takes those stops, builds routes, creates time tables, and generates all the GTFS data. This program has been developed for me, so it is still very rough around the edges and only implements what I need, but I have released the source, so you can branch it and use it. It currently only runs on Linux; I’m running it under Fedora 18. If you want to branch the code, you’ll need the bzr tool.

bzr branch http://bzr.line72.net/subte/master subte-master
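To give a feel for what the generated GTFS data looks like, here is a small sketch that writes a stops.txt file using the four required stop fields from the GTFS spec. It is not code from my application; the helper name and the sample stop are made up for illustration.

```python
import csv
import io

# Required columns for stops.txt in the GTFS specification.
GTFS_STOP_FIELDS = ["stop_id", "stop_name", "stop_lat", "stop_lon"]


def write_stops(stops):
    """Return the text of a GTFS stops.txt file for a list of stop dicts."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=GTFS_STOP_FIELDS, lineterminator="\n")
    writer.writeheader()
    for stop in stops:
        writer.writerow(stop)
    return buf.getvalue()


# Hypothetical stop, not a real BJCTA stop.
example_stops = [
    {"stop_id": "S1", "stop_name": "Example St & 1st Ave", "stop_lat": 33.52, "stop_lon": -86.81},
]
print(write_stops(example_stops))
```

A complete feed also needs routes.txt, trips.txt, stop_times.txt, and a few other files, which is where most of the real work goes.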

Hopefully, in the next few months we’ll have finished cataloging all the stops and will be able to begin beta testing the trip planner on Google Transit! If you are interested in helping out, please visit the above site and start cataloging. Every picture helps!

Click that ‘hood is a fun site developed by Code for America where you test your knowledge of the neighborhoods in your city. Being an activist for Birmingham, along with my fondness of maps, I decided I would get Birmingham up on their site. After all, they just need some GIS data formatted properly. No problem, I’ve worked with GIS data plenty of times before!

Unfortunately when it comes to GIS data, neighborhood boundaries are difficult to come by. This is probably because neighborhood boundaries are fluid and aren’t completely defined like political boundaries. The most popular site for neighborhood boundaries, Zillow.com, didn’t have any available for Birmingham. I kept searching and came upon Birmingham’s Map Portal. This site has some great data on it, including all the neighborhoods. It was just what I was looking for, except, you can’t actually export any GIS data off of it. You can only view it and take screen shots. (It sounds like it’s time to get an open GIS site running for Birmingham… maybe my next project?)

I had images of the neighborhood data now, but this meant I still had to draw the vector data to overlay on a map. Using QGIS, OpenStreetMap, and finally Google Maps, I traced out each of the neighborhood boundaries as vector data. I have it available in both KML and GeoJSON formats:

You can also view my Google Map of the neighborhoods.
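For anyone who hasn’t worked with GeoJSON, each traced boundary ends up as a Feature with a Polygon geometry. The sketch below builds one from a list of traced points. The coordinates are placeholders, and the "name" property is my assumption about how a neighborhood would be labeled, so check what the consuming site actually expects.

```python
import json


def neighborhood_feature(name, ring):
    """Build a GeoJSON Feature from a traced boundary.

    ring is a list of (longitude, latitude) pairs; GeoJSON puts longitude first.
    """
    if len(ring) < 3:
        raise ValueError("a polygon needs at least three points")
    coords = [list(point) for point in ring]
    # GeoJSON polygon rings must be closed: the last point repeats the first.
    if coords[0] != coords[-1]:
        coords.append(list(coords[0]))
    return {
        "type": "Feature",
        "properties": {"name": name},  # property key is an assumption
        "geometry": {"type": "Polygon", "coordinates": [coords]},
    }


# Placeholder square, not a real neighborhood boundary.
traced = [(-86.81, 33.52), (-86.80, 33.52), (-86.80, 33.51), (-86.81, 33.51)]
collection = {
    "type": "FeatureCollection",
    "features": [neighborhood_feature("Example Neighborhood", traced)],
}
print(json.dumps(collection, indent=2))
```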

With that, I was able to upload the data to Click that ‘hood. So, go play and see how well you know Birmingham’s neighborhoods!

*Edit* I have updated this to no longer require you to edit xorg.conf. This also fixes issues if the touchscreen’s usb cable is hotplugged while X is already running.

I recently purchased an ELO 1537L 15-inch open-frame touchmonitor for a project I am doing at work. I have successfully gotten the touchscreen monitor to work under Linux (specifically Scientific 6.x) using USB (I haven’t tried the serial interface). Plugging in the monitor, it is recognized as a 5020 Surface Capacitive:

Aug 3 02:51:13 localhost kernel: usb 2-1: Product: Elo TouchSystems Surface Capacitive 5020

Aug 3 02:51:13 localhost kernel: usb 2-1: Manufacturer: Elo TouchSystems

Aug 3 02:51:13 localhost kernel: input: Elo TouchSystems Elo TouchSystems Surface Capacitive 5020 as /devices/pci0000:00/0000:00:1d.0/usb2/2-1/2-1:1.0/input/input7

Aug 3 02:51:13 localhost kernel: generic-usb 0003:04E7:0042.0003: input,hidraw2: USB HID v1.11 Pointer [Elo TouchSystems Elo TouchSystems Surface Capacitive 5020] on usb-0000:00:1d.0-1/input0

ELO provides some generic drivers for this device. I first attempted to directly use them and found them to be a complete disaster. The whole configuration was really silly (putting stuff into /etc/opt, are you kidding me?). The elo daemon constantly hung and had to be restarted. Restarting X caused the daemon to stop working, thus the touchscreen stopped working.

I quickly removed these drivers and tried it with the evtouch drivers, which I have used for a USB DisplayLink touchscreen monitor in the past (MIMO). With a few changes to my xorg.conf, the evtouch driver immediately recognized it and I was able to capture touch events, although the calibration was initially completely off.

Here are the steps I took to get this working on Scientific Linux 6.0:

Unfortunately, Scientific Linux does not come with the evtouch driver. I have built a 64-bit rpm for Scientific Linux here. If you need a 32-bit version, or a build for another platform (Fedora), download the src rpm and rebuild it (rpmbuild --rebuild xorg-x11-drv-evtouch-0.8.8-1.el6.src.rpm).

You do not need to edit xorg.conf directly. Instead, we will create a hal fdi file in /etc/hal/fdi/policy called elo_touchscreen.fdi:

/etc/hal/fdi/policy/elo_touchscreen.fdi

<?xml version="1.0" encoding="ISO-8859-1"?>

<deviceinfo version="0.2">

<device>

<match key="input.product" contains="Elo TouchSystems, Inc. Elo TouchSystems Surface Capacitive 5010">

<merge key="input.x11_driver" type="string">evtouch</merge>

<merge key="input.x11_options.MinX" type="string">3724</merge>

<merge key="input.x11_options.MaxX" type="string">318</merge>

<merge key="input.x11_options.MinY" type="string">3724</merge>

<merge key="input.x11_options.MaxY" type="string">318</merge>

<merge key="input.x11_options.SwapX" type="string">true</merge>

<merge key="input.x11_options.SwapY" type="string">true</merge>

</match>

</device>

</deviceinfo>

If your monitor is slightly different, you will need to get its product name and use it in the match key="input.product" line in the above file:

$ lshal | grep input.product

input.product = 'Sleep Button' (string)

input.product = 'Power Button' (string)

input.product = 'Macintosh mouse button emulation' (string)

input.product = 'ImExPS/2 Generic Explorer Mouse' (string)

input.product = 'AT Translated Set 2 keyboard' (string)

input.product = 'Elo TouchSystems, Inc. Elo TouchSystems Surface Capacitive 5010' (string)

You should now be able to unplug your touchscreen, plug it back in, and have it work without restarting X.

The MinX, MinY, MaxX, and MaxY values are used for calibrating the touchscreen. The evtouch source available on their site comes with a calibration utility. However, I was unable to get this to run. Instead, I played with the MinX, MaxX, MinY, and MaxY values in my xorg.conf until it was close enough. As you can see, I had to mirror both the X and Y values.
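To see why a Min value larger than the Max value mirrors an axis, here is a sketch of the kind of linear mapping these options describe. This is my own illustration, not the evtouch driver's code, and the 1024-pixel screen width is an assumption.

```python
def raw_to_screen(raw, raw_min, raw_max, screen_size):
    """Linearly map a raw touch reading onto a pixel in [0, screen_size - 1].

    If raw_min is larger than raw_max, the axis comes out mirrored.
    """
    if raw_min == raw_max:
        raise ValueError("raw_min and raw_max must differ")
    fraction = (raw - raw_min) / (raw_max - raw_min)
    # Clamp readings that fall outside the calibrated range.
    fraction = min(1.0, max(0.0, fraction))
    return round(fraction * (screen_size - 1))


# Using MinX=3724 and MaxX=318 from the fdi file on an assumed 1024-pixel-wide screen.
print(raw_to_screen(3724, 3724, 318, 1024))  # raw reading equal to MinX
print(raw_to_screen(318, 3724, 318, 1024))   # raw reading equal to MaxX
```

A reading equal to MinX lands on the left edge and a reading equal to MaxX lands on the right edge, so tweaking the two numbers stretches or shifts where touches land.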

I noticed that Scientific Linux also includes an elographics package: xorg-x11-drv-elographics. I have no idea if this works better or not although I have heard they only work with the serial interface. I have it working with evtouch, so I’m happy. If anyone has tried the elographics and had success, please comment!

The Gnome project recently released version 3 of their desktop, which includes their all new Gnome Shell. I have been using it for several months now, and I must say I really like the direction it is going. It is still early and is missing a lot of little things, but those will come soon. We are starting to see new extensions being built for it to extend the functionality.

One feature I have found blatantly missing is the ability to search active windows in the overview. In overview mode, you first see a live preview of all your windows. But if you’re like me, you have 15 terminals and 10 web browser windows up (I despise tabs!). Typing starts a search, which by default searches: your installed applications to quickly start one, files, and places. Search is completely missing the ability to search through open windows based on their title!

I quickly wrote my first gnome-shell extension to do just that. It is still an early version, and I would like to update it to add more features such as showing a live window preview instead of just the application icon.

Try it out, and send me your thoughts:

gnome-shell-extensions-window-search-0.0.1

You can use the gnome-tweak-tool to install it, or extract it into

$HOME/.local/share/gnome-shell/extensions

I purchased a Genius G-Pen F350 for cheap last week. I am working on translating a book from Chinese, and need to look up characters. The quickest way to do this is to draw the character and use handwriting recognition software such as tegaki. My mousing skills are subpar, so I thought a tablet would help. I picked the Genius for several reasons: it was cheap, it was thin so I can carry it to Chinese class, and it’s supposed to work under Linux.

Unfortunately, this tablet does not work out of the box on Ubuntu 9.10 (Karmic). Plugging it in recognizes it as a mouse which can be controlled with the pen. However, none of the buttons work, and the tablet isn’t mapped absolutely to the screen (i.e., if you touch the upper left part of the tablet, the mouse should jump to the upper left part of your screen). After digging around, I have finally been able to get this to work satisfactorily. Some things are not working, such as the buttons or all the shortcuts, but for my needs, it works well. Here are the steps I took:

There are two ways to do this:

$ tar zxvf wizardpen-0.7.0-alpha2.tar.gz

$ cd wizardpen-0.7.0-alpha2

$ sudo aptitude install xutils libx11-dev libxext-dev build-essential xautomation xinput xserver-xorg-dev

$ ./configure --with-xorg-module-dir=/usr/lib/xorg/modules

$ make

$ sudo make install

The install should have copied a file called 99-x11-wizardpen.fdi into /etc/hal/fdi/policy/. You will need to edit this file with your favorite text editor and change a few things. For example, in mine, I needed to change the info.product line to WALTOP International Corp. Slim Tablet. I got the name from the output of grep -i name /proc/bus/input/devices:

$ grep -i name /proc/bus/input/devices

N: Name="Lid Switch"

N: Name="Power Button"

N: Name="Sleep Button"

N: Name="Macintosh mouse button emulation"

N: Name="AT Translated Set 2 keyboard"

N: Name="Video Bus"

N: Name="Logitech Optical USB Mouse"

N: Name="DualPoint Stick"

N: Name="AlpsPS/2 ALPS DualPoint TouchPad"

N: Name="Dell WMI hotkeys"

N: Name="HDA Intel Mic at Ext Left Jack"

N: Name="HDA Intel HP Out at Ext Left Jack"

N: Name="WALTOP International Corp. Slim Tablet"

Save this file, then unplug and replug in your tablet. The new settings should be picked up immediately. You will probably also need to change the TopX, TopY, BottomX, and BottomY values. Please see the next section on calibration.

Hopefully at this point your tablet is basically working. However, for it to be useful, it needs to be calibrated. You can try to guess on these values or you can use the calibration tool that came in the wizardpen-0.7.0-alpha2.tar.gz package from above (it is not included in the .deb!). Extract the source archive and go into the calibrate folder. There should already be a wizardpen-calibrate executable. If not, run make to build it.

To calibrate your device, run:

$ sudo ./wizardpen-calibrate /dev/input/event6

You may need to replace /dev/input/event6 with the event your tablet is on. You can figure this out by running:

$ ls -l /dev/input/by-id

total 0

lrwxrwxrwx 1 root root 9 2010-01-06 10:56 usb-Logitech_Optical_USB_Mouse-event-mouse -> ../event7

lrwxrwxrwx 1 root root 9 2010-01-06 10:56 usb-Logitech_Optical_USB_Mouse-mouse -> ../mouse2

lrwxrwxrwx 1 root root 9 2010-01-06 11:25 usb-WALTOP_International_Corp._Slim_Tablet-event-if00 -> ../event6

As you can see, my tablet points to event6. Follow the directions of the calibration tool, and it will give you the TopX, TopY, BottomX, and BottomY values you need to replace in your 99-x11-wizardpen.fdi.
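If you would rather not read the listing by eye, the lookup can be scripted. This is a rough sketch with hypothetical helper names; it assumes the tablet's by-id link name contains "-event-", as it does in my listing above.

```python
import os


def pick_event_link(link_names, name_fragment):
    """Return the first by-id link name that is an event node and contains name_fragment."""
    for name in sorted(link_names):
        if name_fragment in name and "-event-" in name:
            return name
    return None


def find_event_device(name_fragment, by_id_dir="/dev/input/by-id"):
    """Resolve the matching by-id symlink to its /dev/input/eventN path, or return None."""
    if not os.path.isdir(by_id_dir):
        return None
    link = pick_event_link(os.listdir(by_id_dir), name_fragment)
    if link is None:
        return None
    return os.path.realpath(os.path.join(by_id_dir, link))


# The link names from the listing above.
listing = [
    "usb-Logitech_Optical_USB_Mouse-event-mouse",
    "usb-Logitech_Optical_USB_Mouse-mouse",
    "usb-WALTOP_International_Corp._Slim_Tablet-event-if00",
]
print(pick_event_link(listing, "WALTOP"))
print(find_event_device("WALTOP"))  # None unless a matching device is plugged in
```

The path returned by find_event_device is what you would pass to wizardpen-calibrate.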

The buttons on mine did not work, and it is by default way too sensitive. By changing the pressure, you can specify how hard you must push down before it pushes the left mouse button. This means you can lightly drag the pen and it will just move the mouse. But if you push down harder, it will push and hold the left mouse button down. You can change this by adding the following to your 99-x11-wizardpen.fdi (make sure you add it next to the other lines starting with merge):

<merge key="input.x11_options.TopZ" type="string">512</merge>

Valid values are 0 to 1024. The higher the value, the more you need to push down before the left mouse button activates. I found 512 to be an acceptable value. However, if you are trying to do pressure sensitive drawing, this may not be ideal.

My entire /etc/hal/fdi/policy/99-x11-wizardpen.fdi looks like:

<?xml version="1.0" encoding="ISO-8859-1" ?>

<deviceinfo version="0.2">

<device>

<!-- This MUST match with the name of your tablet -->

<match key="info.product" contains="WALTOP International Corp. Slim Tablet">

<merge key="input.x11_driver" type="string">wizardpen</merge>

<merge key="input.x11_options.SendCoreEvents" type="string">true</merge>

<merge key="input.x11_options.TopZ" type="string">512</merge>

<merge key="input.x11_options.TopX" type="string">573</merge>

<merge key="input.x11_options.TopY" type="string">573</merge>

<merge key="input.x11_options.BottomX" type="string">9941</merge>

<merge key="input.x11_options.BottomY" type="string">5772</merge>

<merge key="input.x11_options.MaxX" type="string">9941</merge>

<merge key="input.x11_options.MaxY" type="string">5772</merge>

</match>

</device>

</deviceinfo>

Automake and I have been friends for a long time. We’ve loved, we’ve laughed, we’ve cried … A lot! Automake is the de facto build system on unix (especially linux) systems. I use it in almost all my projects. Automake isn’t too hard once you stop copying someone else’s Makefile.am’s and configure.ac’s and actually take a few minutes to learn what’s going on, although the lack of documentation and that horrible language called m4 are a huge turn-off. Although I really like the way automake feels, I’ve felt that it needs to be modernized with a simpler syntax, more cohesive tools, and a decent scripting language. This, and the fact that it doesn’t play nicely under windows (unless you want to build with gcc in cygwin or cross-compile), has left me looking for something new.

Two years ago, when I changed direction and started working on different projects, our regular windows builds ceased. I somehow had become the official builder + releaser guy in our lab. Every once in a while we’d need to get a new development build out for a windows user. I’d have to step in, remember how everything worked, fight the system, and eventually come up with a build.

A long time ago, I had everything cross compiling in a chroot environment. This was nice since I had a shell script which would do everything for me, except the final freezing of python, which had to be done in windows. Over the years the dependencies in the chroot environment became out of date, and things stopped building. I was busy and never updated the machine, and eventually it was re-purposed for something else.

Then came the windows vmware image with mingw set up (we didn’t need to depend on cygwin for any posix stuff). This was okay, but more difficult to script, and became a much more manual process which also became a constant update headache.

Recently we wanted to stop building under gcc and move to using visual studio’s compiler. The build system had stopped working and we were running into issues when creating our python modules. Automake + libtool would not play nicely with Visual Studio’s compiler, so I started looking at alternatives.

After some initial testing, I decided to move our projects over to SCons. SCons has made some things very easy, like building with Visual Studio’s compiler and handling SWIG. However, SCons is constantly doing things to annoy me. Here’s my list of personal annoyances:

There are plenty of good things about SCons. I really like working in python, and it’s nice that it works well in windows and unix. Overall, SCons is mostly working for us, but I am in no way satisfied with it. I suppose someday I’ll have to write my own build system.

I’m mainly posting this so I won’t lose the link (what are bookmarks?). Excellent reference for shared libraries under linux.