Git is an incredibly powerful tool, but it is often obtuse. There are many workflows you can use with git. One that I use often has a master branch, where all new development happens, and a maintenance branch, where certain fixes get backported for a new stable release. I have found that unless you do things in a certain way, backporting changes can be a very tedious task.

In my workflow, any new work on a feature or a bug is always done in a new feature branch created from master. That branch is always tied to a ticket (a GitHub issue or a Jira ticket, for example) that explains the details of the fix. Each branch fixes or adds one and only one thing, which makes backporting specific fixes or features much easier. Once the feature branch is complete, it is merged into master. I ALWAYS merge using the --no-commit and --no-ff options:

$ git merge --no-commit --no-ff issue-23

This is for several reasons:

- I always want a single commit that represents the merge of the entire branch. When backporting, I will be able to specify this single commit and it will bring in all the associated commits with it.

- It keeps my top-level git log clean. Running git log --first-parent will only show my merge commits, not every single commit that was ever made.

- It gives me a chance to update the ChangeLog appropriately and to write a standard commit message, like “Merged Issue 23 – Added new feature blah”
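To make these three points concrete, here is a minimal sketch in a throwaway repository. The file names, commit messages, and the demo identity are made up for illustration; only the issue-23 branch name and the merge options come from the workflow above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway repository so the demo cannot touch real work.
repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # make sure the first branch is "master"
git config user.name "Demo User"          # assumed identity, demo only
git config user.email "demo@example.com"

echo "initial" > app.txt
echo "ChangeLog" > ChangeLog
git add app.txt ChangeLog
git commit -q -m "Initial commit"

# One branch per ticket; here it gets two small commits.
git checkout -q -b issue-23
echo "step 1" >> app.txt
git commit -q -am "issue-23: step 1"
echo "step 2" >> app.txt
git commit -q -am "issue-23: step 2"

# Merge without fast-forwarding, and stop before the merge is committed.
git checkout -q master
git merge --no-commit --no-ff issue-23

# The pause is the chance to update the ChangeLog and write the message.
echo "* Issue 23 - Added new feature blah" >> ChangeLog
git add ChangeLog
git commit -q -m "Merged Issue 23 - Added new feature blah"

# Count the commits on the top-level line of history versus all commits.
top_level=$(git rev-list --count --first-parent HEAD)
everything=$(git rev-list --count HEAD)
echo "top-level commits: $top_level, all commits: $everything"
git log --first-parent --oneline
```

The first-parent log lists only the initial commit and the merge commit, while the two commits made on issue-23 stay reachable underneath the merge.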

Let’s look at an example. At some point, I decided master was ready for a 1.0.0 release. I tagged this version, and then created a new maintenance branch from master called 1.0.x:

$ git checkout -b 1.0.x master

Development will continue in the master branch, but any critical bug fixes will get backported from master into the 1.0.x branch, where I will then make new stable releases of 1.0.1, 1.0.2, …

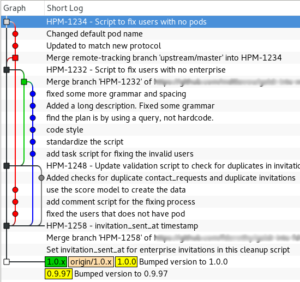

If we take a look at the log of master, you will see several top-level commits, each merging a feature branch into master. Each of these merge commits has a number of subcommits that made up its feature branch.

In this graph, the very bottom commit shows where master and the 1.0.x branch diverged. On master, we can see four merge commits. Each of the merged branches contains subcommits:

- HPM-1258 – invitation_sent_at timestamp

- HPM-1248 – Update validation script to check for duplicates in invitations and contact_requests

- HPM-1232 – Script to fix users with no enterprise

- HPM-1234 – Script to fix users with no pods

Let’s say that HPM-1234 is the change that we want to merge in. This should bring in every single commit along the red line. This would be very tedious if we had to backport all six individual commits in that branch. That is why we merged this branch into master using the --no-ff and --no-commit options, so we could guarantee a single merge commit representing all of them.

Normally, in git, if we wanted to merge in HPM-1234, we would do a git merge 04145ec, where 04145ec is the SHA-1 of the merge commit. But in git, this will merge every change up to and including HPM-1234. So this will also merge in HPM-1232, HPM-1248, and HPM-1258, which is not what we want!
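A small sketch can show the difference between what a plain merge would drag in and what the merged branch itself contains. It uses a simplified, made-up history (two feature branches called feature-a and feature-b, and a hypothetical commit_line helper), not the HPM repository from this post.

```shell
#!/usr/bin/env bash
set -euo pipefail

repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"          # assumed identity, demo only
git config user.email "demo@example.com"

# Hypothetical helper: append a line to a file and commit it.
commit_line() {
    echo "$2" >> "$1"
    git add "$1"
    git commit -q -m "$2"
}

commit_line base.txt "release 1.0.0"
git branch 1.0.x                          # maintenance branch starts here

git checkout -q -b feature-a
commit_line a.txt "feature-a: part 1"
commit_line a.txt "feature-a: part 2"
git checkout -q master
git merge -q --no-ff -m "Merged feature-a" feature-a

git checkout -q -b feature-b
commit_line b.txt "feature-b: part 1"
commit_line b.txt "feature-b: part 2"
commit_line b.txt "feature-b: part 3"
git checkout -q master
git merge -q --no-ff -m "Merged feature-b" feature-b
merge_b=$(git rev-parse HEAD)

# Everything a plain "git merge $merge_b" on 1.0.x would pull in.
would_merge=$(git rev-list --count "1.0.x..${merge_b}")

# Only the non-merge commits reachable from the merge but not from its first parent.
branch_only=$(git rev-list --count --no-merges "${merge_b}^..${merge_b}")

echo "plain merge would bring in: $would_merge commits"
echo "feature-b alone contains:   $branch_only commits"
```

In this toy history the plain merge would carry seven commits (both branches plus both merge commits), while the range merge^..merge isolates the three commits that belong to feature-b.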

Git does have a special command for just picking out a single change: cherry-pick. Cherry picking allows us to pull out a specific commit and apply it to a separate branch. Unfortunately, cherry-pick doesn’t handle scenarios well where a subcommit is a merge. If you look at our example, we can see along our red graph where master was merged back into our branch. This is going to confuse the cherry-pick command. For the cherry-pick command to work, we would have to manually specify every single commit in the red branch one at a time, instead of just being able to specify the merge commit. And we would either have to skip over the merge commit, or handle it specially using the -m option (depending on what was merged in).

Instead of cherry-pick, we can instead generate a series of patches, then reapply them. We will do this through the use of two commands: format-patch and am.

There is an issue with doing this. Git loses some of the history, so the relationship of this merge between master and the 1.0.x branch is lost. However, for our case of backporting, this is acceptable.

Each one of the commits in the red graph will come into our maintenance branch as a separate commit. This is not exactly what we want. I would rather have a single top-level merge commit in my 1.0.x branch. Therefore, I will create a new staging branch from 1.0.x, backport the changes, then merge the staging branch into my 1.0.x branch. Here are the steps:

    # create a new staging branch from
    # the 1.0.x branch
    $ git checkout -b backport-hpm-1234 1.0.x
    #
    # We will now run format-patch then pipe
    # the output directly into am. This will
    # create the patches and apply them in a
    # single step. We will tell git to just
    # use our top level commit
    $ git format-patch -n --stdout 04145ec^..04145ec | git am
    Applying: fixed the users that does not have a pod
    Applying: ...
    ...
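If you want to try the format-patch and am step without touching a real repository, the following is a minimal sketch. The branch name hpm-fix, the file names, and the commit_line helper are invented for the demo; the range syntax and the pipe are the same as in the commands above.

```shell
#!/usr/bin/env bash
set -euo pipefail

repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"          # assumed identity, demo only
git config user.email "demo@example.com"

# Hypothetical helper: append a line to a file and commit it.
commit_line() {
    echo "$2" >> "$1"
    git add "$1"
    git commit -q -m "$2"
}

commit_line base.txt "release 1.0.0"
git branch 1.0.x                          # maintenance branch starts here

# Unrelated work on master that should NOT end up in 1.0.x.
commit_line other.txt "unrelated work on master"

# The fix we want to backport: two commits, merged with --no-ff.
git checkout -q -b hpm-fix
commit_line fix.txt "fix: part 1"
commit_line fix.txt "fix: part 2"
git checkout -q master
git merge -q --no-ff -m "Merged hpm-fix" hpm-fix
merge_commit=$(git rev-parse HEAD)

# Staging branch from 1.0.x, then turn the merged branch into patches
# and apply them in one step. format-patch skips the merge commit itself.
git checkout -q -b backport-hpm-fix 1.0.x
git format-patch -n --stdout "${merge_commit}^..${merge_commit}" | git am

applied=$(git rev-list --count 1.0.x..backport-hpm-fix)
echo "commits replayed onto the staging branch: $applied"
```

Both commits of the fix land on the staging branch as new commits, and other.txt never appears there because it was not part of the selected range.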

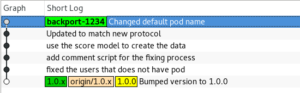

Now we our staging branch looks like the following:

Now we can go back to our maintenance 1.0.x branch, and merge in our staging branch using the --no-commit and --no-ff options so that we can create a custom merge commit:

    $ git checkout 1.0.x
    $ git merge --no-commit --no-ff backport-hpm-1234
    #
    # We can update our ChangeLog or do anything else,
    # then commit
    $ git commit -m "Backported HPM-1234 - Script to fix users with no pods"

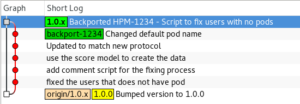

Our 1.0.x tree now looks like:

You can see we now have a single top level commit in our 1.0.x branch representing the HPM-1234 branch that was backported in.
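If you do this often, the whole sequence could be wrapped in a small shell function. The sketch below is only one possible shape: backport_merge is a hypothetical helper (not a git command), the staging branch name is derived from the short hash as an assumption, and it commits straight away, so add your ChangeLog edit where the comment indicates.

```shell
#!/usr/bin/env bash
set -euo pipefail

# backport_merge <merge-commit> <maintenance-branch> <commit-message>
# Hypothetical helper: replays the commits behind a --no-ff merge onto a
# staging branch, then merges that staging branch as one top-level commit.
backport_merge() {
    local merge_commit=$1
    local maintenance=$2
    local message=$3
    local short staging

    short=$(git rev-parse --short "$merge_commit")
    staging="backport-${short}"           # assumed naming scheme

    # Replay the merged branch's commits onto a staging branch.
    git checkout -q -b "$staging" "$maintenance"
    git format-patch --stdout "${merge_commit}^..${merge_commit}" | git am -q

    # Fold the staging branch into the maintenance branch as a single merge.
    git checkout -q "$maintenance"
    git merge -q --no-ff --no-commit "$staging"
    # (update the ChangeLog here, then "git add" it, if you keep one)
    git commit -q -m "$message"
}
```

With the example from this post, the call would look like backport_merge 04145ec 1.0.x "Backported HPM-1234 - Script to fix users with no pods". It assumes a clean working tree and that the patches apply without conflicts; if git am stops on a conflict, the function exits and you finish by hand.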